The Case for Separate Operational and Analytical Models

Why MDM Needs Purpose-Built Structures for Each Audience

Last week, we looked at "Designing Master Data Hierarchies That Actually Work." The focus was structure. How hierarchies break when they try to satisfy too many use cases at once. Why clean rollups matter. And why forcing one hierarchy to serve operations, reporting, and planning usually leads to confusion instead of clarity.

That discussion surfaced a deeper issue that hierarchies alone cannot solve.

This week, we step back and look at the models underneath those hierarchies. Specifically, why master data management breaks down when operational and analytical needs are forced into a single structure. The core argument is simple: operations and analytics consume master data in fundamentally different ways. When you design one model to serve both, you end up serving neither well.

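Operations asks what is true right now. Analytics asks what was true then, and what has changed since. Both questions are reasonable. They are also incompatible inside a single model.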

That tension is where many MDM programs quietly fail. Not because the data is wrong, but because the structure is wrong.

This article argues for a simple shift in thinking: master data does not have one audience. It has at least two. And each audience deserves a structure built for how they actually work.

Why operational and analytical MDM serve different audiences

If you watch how master data gets used in daily operations, a pattern emerges quickly.

Operational users and systems are not curious. They are busy.

They need to complete work. Create the order. Assign the territory. Validate eligibility. Close the ticket. Every interaction depends on master data being accurate, fast, and stable in the moment.

If the data changes mid-process, systems fail in ways that are hard to debug. If validation is loose, downstream steps break. If response time drifts, users lose trust almost immediately.

Operational master data exists to support action. It lives inside workflows. It must behave predictably under pressure.

Analytics works very differently.

Analysts, BI developers, and data scientists are not trying to complete transactions. They are trying to understand patterns. They want to compare, segment, group, and explain. They care deeply about context and time.

They ask questions that operational systems are not designed to answer. What changed. When it changed. How structure influenced outcomes. Whether the definition used today matches the definition used last year.

Analytical master data exists to support understanding. It needs room for history, enrichment, and iteration.

When these two worlds are forced into one model, something always gives.

Why a single master data model breaks down

At first, the compromise seems manageable.

Analytics asks for a few more attributes, so they get added to the golden record. Marketing wants segments. Finance wants rollups. Risk wants scores.

Before long, the operational master record is wide and heavy. Most applications never use those fields. They still carry them everywhere.

Payloads grow. Validation logic expands. Steward effort increases. Screens fill with fields that mean nothing to the people doing the work.

Worse, derived attributes start to feel official. A segmentation label that was meant for reporting shows up in a service screen. Someone treats it as truth. No one remembers where it came from.

At the same time, the model continues to overwrite values because that is how operational mastering works. Survivorship picks the best current value. History disappears.
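
To make the overwrite concrete, here is a minimal sketch of survivorship. The source names, the priority ranking, and the tie-break on recency are assumptions for illustration, not rules from any particular MDM product.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical source ranking: lower number wins.
SOURCE_PRIORITY = {"crm": 0, "billing": 1, "web_form": 2}


@dataclass(frozen=True)
class SourceValue:
    source: str
    value: str
    updated_on: date


def survive(candidates):
    """Pick the winning value: most trusted source first, newest value as tie-breaker."""
    return min(
        candidates,
        key=lambda c: (SOURCE_PRIORITY[c.source], -c.updated_on.toordinal()),
    )


golden_record = {}

# First mastering run: only the web form has supplied a city.
run_1 = [SourceValue("web_form", "Austin", date(2023, 3, 1))]
golden_record["city"] = survive(run_1).value

# Later run: the CRM reports a different city and outranks the web form.
run_2 = run_1 + [SourceValue("crm", "Denver", date(2024, 6, 15))]
golden_record["city"] = survive(run_2).value  # overwrites in place

print(golden_record)  # {'city': 'Denver'}
```

The golden record is correct for operations. It also no longer knows the customer was ever in Austin, or when that stopped being true.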

That is when analytics starts to struggle.

Dashboards show today’s customer attributes applied to transactions from last year. Revenue gets grouped under the wrong hierarchy. Analysts build side tables and snapshots to compensate. Now there are two truths, neither fully trusted.
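
The distortion is easy to reproduce. The sketch below groups the same two transactions twice: once by the customer's current segment, once by the segment in effect on the transaction date. The segment history, dates, and amounts are made-up sample data.

```python
from bisect import bisect_right
from datetime import date

# Hypothetical segment history for one customer: (valid_from, segment), sorted by date.
segment_history = [
    (date(2022, 1, 1), "SMB"),
    (date(2024, 1, 1), "Enterprise"),
]

# Hypothetical transactions for the same customer: (transaction_date, amount).
transactions = [
    (date(2023, 5, 10), 1200.0),
    (date(2024, 3, 2), 5000.0),
]


def segment_as_of(history, on):
    """Return the segment in effect on a given date, or None if none existed yet."""
    starts = [valid_from for valid_from, _ in history]
    idx = bisect_right(starts, on) - 1
    return history[idx][1] if idx >= 0 else None


current_segment = segment_history[-1][1]
by_current = {}
by_as_of = {}
for txn_date, amount in transactions:
    # What a current-state-only model produces: every sale lands in today's segment.
    by_current[current_segment] = by_current.get(current_segment, 0.0) + amount
    # What a model with history produces: each sale lands in the segment of its day.
    segment = segment_as_of(segment_history, txn_date)
    by_as_of[segment] = by_as_of.get(segment, 0.0) + amount

print(by_current)  # {'Enterprise': 6200.0}
print(by_as_of)    # {'SMB': 1200.0, 'Enterprise': 5000.0}
```

Same transactions, same customer, two different revenue stories. Only the second one matches what was true when the sale happened.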

Performance issues usually follow.

Operational systems generate frequent, small reads and writes. Analytics wants wide scans and heavy joins. Indexes help one workload and hurt the other. Extracts compete with live updates.

Even if the hardware holds, governance starts to fray.

Operational governance needs to be strict. Mistakes here break real processes and create real risk. Analytical work needs flexibility. Exploration does not thrive behind approval gates.

One model forces one style of governance. Analysts feel blocked. Stewards feel overwhelmed. Exceptions pile up. Teams quietly route around MDM.

At that point, the program still exists, but belief in it is gone.

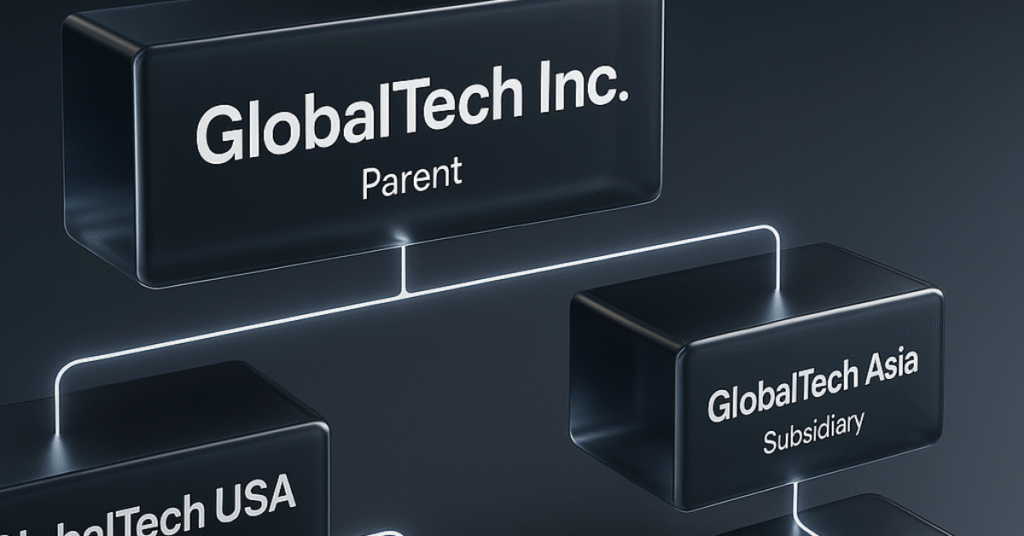

The architectural case for separate MDM models

The problem is not duplication. The problem is asking one structure to do two jobs.

When you separate operational and analytical master data models, you are not creating two truths. You are creating two lenses on the same truth.

The operational model is designed to be lean and controlled. It focuses on identity, survivorship, and the attributes required to run business processes. It supports APIs, events, and steward workflows. It enforces rules at the point where mistakes matter most.

The analytical model is designed to answer questions. It reshapes master data into forms that are easy to join, slice, and compare. It carries history where it matters. It allows enrichment and derived attributes without risking operational stability.

Each model does less. Together, they do more.

Operational vs analytical MDM: a side-by-side comparison

| Area | Operational master data model | Analytical master data model |
|---|---|---|
| Primary purpose | Run workflows | Explain outcomes |
| Time focus | Current state | Current and historical |
| Data shape | Lean, controlled | Broad, query-ready |
| Update pattern | Frequent small writes | Batch or streamed loads |
| History | Limited audit trail | Versioned attributes |
| Governance | Strict approvals | Defined standards, flexible intake |
| Consumers | Apps, ops users | BI, analytics, data science |
| Success measure | Fewer process errors | Trusted metrics |

This contrast is not academic. It explains why so many “single model” designs struggle in practice.

How the models stay connected without chaos

Separation only works if identity stays aligned. That requires a deliberate connection strategy.

Many teams start with batch replication. On a schedule, master data flows from the operational hub into analytics. It is simple and auditable. Latency is usually acceptable for reporting.

As needs grow, teams add incremental techniques. Change data capture streams only what changed. Event publishing lets the MDM hub announce meaningful business changes. Snapshots preserve point-in-time views for finance and regulatory reporting.
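
One way the analytical side might consume those changes is to turn each event into a versioned row instead of an overwrite. This is a sketch under stated assumptions: the `AttributeVersion` shape and the `apply_change` helper are hypothetical, events are assumed to arrive in date order, and the list stands in for a real table.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AttributeVersion:
    master_id: str
    attribute: str
    value: str
    valid_from: date
    valid_to: Optional[date] = None  # None means "still current"


def apply_change(history, master_id, attribute, value, changed_on):
    """Close the open version for this key, if any, and append the new one."""
    for version in history:
        is_open_match = (
            version.master_id == master_id
            and version.attribute == attribute
            and version.valid_to is None
        )
        if is_open_match:
            if version.value == value:
                return  # same value as the open version: nothing to record
            version.valid_to = changed_on
    history.append(AttributeVersion(master_id, attribute, value, changed_on))


history = []
apply_change(history, "C-001", "segment", "SMB", date(2022, 1, 1))
apply_change(history, "C-001", "segment", "Enterprise", date(2024, 1, 1))
apply_change(history, "C-001", "segment", "Enterprise", date(2024, 2, 1))  # repeat event

for version in history:
    print(version.value, version.valid_from, version.valid_to)
```

The operational hub keeps publishing current-state changes. The analytical store decides how much of that history to keep, keyed on the same master ID.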

Virtualization sometimes appears tempting, but it should be used sparingly. Direct analytical access to operational stores brings back the workload contention that separation was meant to avoid.

The pattern matters less than the principle. Operational systems remain the source for current-state truth. Analytical systems consume that truth in forms suited to insight.

How separation improves data quality and trust

Operational MDM enforces quality where errors hurt most. It prevents bad data from entering workflows.

Analytical MDM reveals patterns that operational views cannot see. Drift. Inconsistency. Gaps over time.
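
As one illustration of a check that only makes sense once history exists, the sketch below scans a master ID's validity intervals for gaps and overlaps. The interval layout (an exclusive `valid_to`, with `None` for the current version) and the sample dates are assumptions.

```python
from datetime import date

# Hypothetical validity intervals for one master ID: (valid_from, valid_to).
# valid_to is exclusive; None marks the open, current version.
intervals = [
    (date(2022, 1, 1), date(2023, 1, 1)),
    (date(2023, 3, 1), date(2024, 1, 1)),  # starts two months late: a gap
    (date(2023, 12, 1), None),             # starts before the previous one ends: an overlap
]


def find_issues(intervals):
    """Compare each version with the next one and report gaps and overlaps."""
    issues = []
    ordered = sorted(intervals, key=lambda interval: interval[0])
    for (_, prev_end), (next_start, _) in zip(ordered, ordered[1:]):
        if prev_end is None or next_start < prev_end:
            issues.append(("overlap", next_start, prev_end))
        elif next_start > prev_end:
            issues.append(("gap", prev_end, next_start))
    return issues


for issue in find_issues(intervals):
    print(issue)
```

Findings like these are what flow back to stewards in the loop described next.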

Together, they form a loop.

Analytics surfaces issues. Stewards refine rules. Operations benefit. Trust grows because each audience understands what the data represents and what it does not.

Why separate models unlock better analytics and AI

When analysts stop rebuilding identity logic, they start doing analysis.

Consistent master data makes joins predictable. History enables trend analysis. Enrichment supports modeling.

Data scientists spend less time cleaning and more time learning. BI teams spend less time reconciling and more time explaining.

That is not a tooling win. It is a structural one.

A practical path forward

This does not require a big bang.

Start by defining identity and survivorship for one or two core entities. Deliver operational consumption first. Feed that mastered data into analytics. Add history only where it answers real questions. Let insights inform operational improvements.

Design separation on purpose instead of discovering it by accident.

Why purpose-built models are the real single source of truth

One source of truth is not a table.

It is a shared understanding of identity, meaning, and usage.

Operational and analytical master data models serve different needs. When you respect that, master data becomes an asset instead of a constraint.

Operations get stability. Analytics gets context.

That is not fragmentation. That is maturity.